Experiment with LLMs: Deploy a Llama3-Powered Chatbot onto Kubernetes on your Laptop!

Youcef Guichi, May 20, 2024


In this blog post, we're going to walk through how you can deploy your ML workloads on Kubernetes and enable GPU acceleration for them.

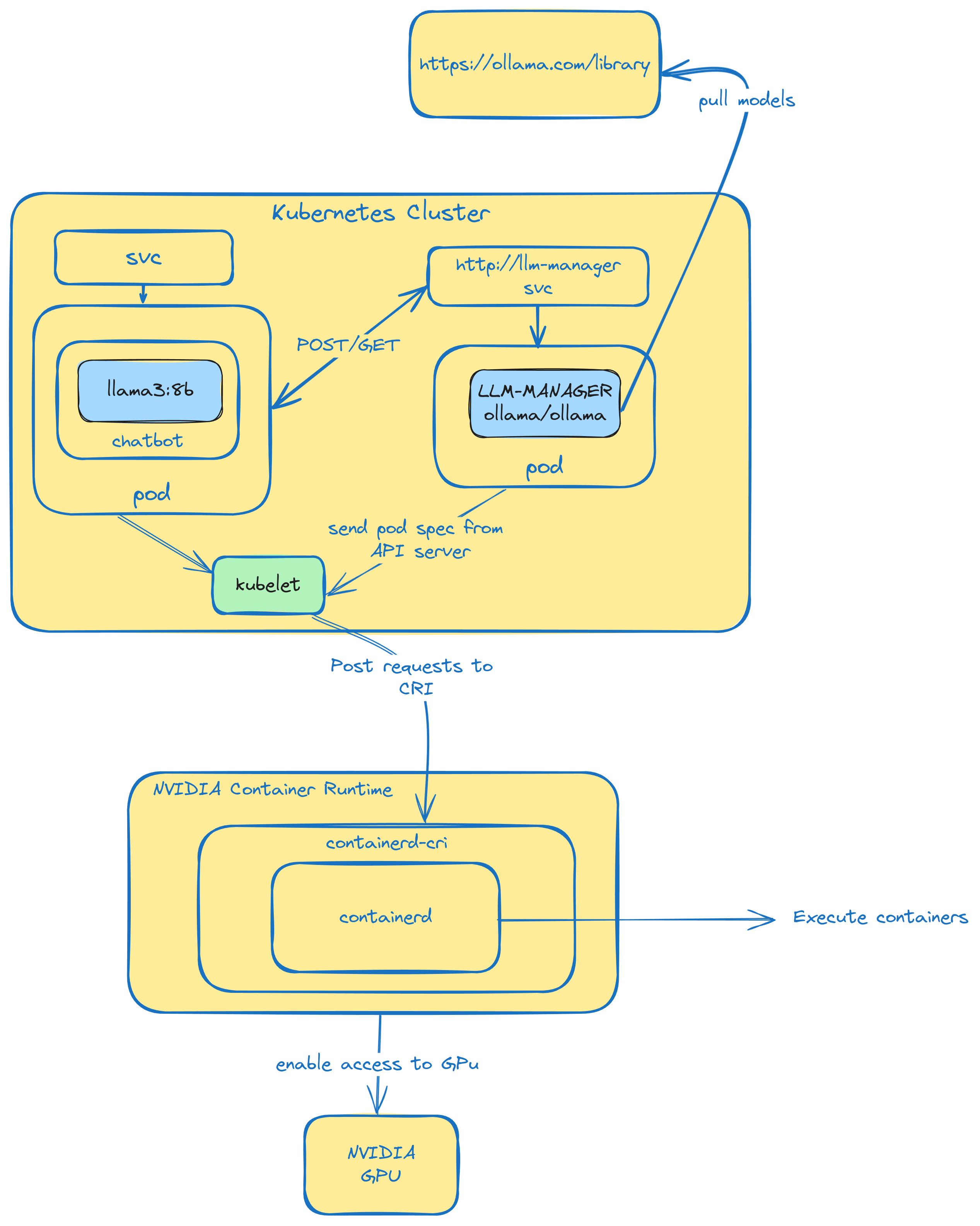

The diagram above shows a fun, at-home experiment using nothing but Ollama and your trusty, dusty laptop.

Before we dig into the practical part, we want to explore what's happening under the hood of your Kubernetes cluster. Some of us are always curious about how things are handled behind the scenes, so let us give you a VIP tour.

Shall we?

What is a Container Runtime?

A container runtime, in a nutshell, is the piece of software responsible for executing containers and managing their lifecycle.

It handles tasks such as pulling container images, creating and starting containers, and managing the resources and isolation of each container.
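To make "managing their lifecycle" a bit more concrete, here's a toy Python model of the states a container goes through. The state names mirror the ContainerState enum of the Kubernetes CRI API; the transition table and the ToyContainer class are our own simplification for illustration, not how containerd actually tracks containers.

```python
from enum import Enum


class ContainerState(Enum):
    """State names borrowed from the CRI API's ContainerState enum."""
    CREATED = "CONTAINER_CREATED"
    RUNNING = "CONTAINER_RUNNING"
    EXITED = "CONTAINER_EXITED"
    UNKNOWN = "CONTAINER_UNKNOWN"


# Simplified, hypothetical transition table: a created container can be
# started, a running one can exit, and an exited one stays exited.
ALLOWED = {
    ContainerState.CREATED: {ContainerState.RUNNING},
    ContainerState.RUNNING: {ContainerState.EXITED},
    ContainerState.EXITED: set(),
    ContainerState.UNKNOWN: set(),
}


class ToyContainer:
    def __init__(self, image: str) -> None:
        self.image = image
        self.state = ContainerState.CREATED  # every container starts out "created"

    def transition(self, new_state: ContainerState) -> None:
        # Reject any move the table above doesn't allow.
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"cannot go from {self.state.name} to {new_state.name}")
        self.state = new_state


c = ToyContainer("nginx")
c.transition(ContainerState.RUNNING)
c.transition(ContainerState.EXITED)
print(c.state.name)  # EXITED
```

There is deliberately no way out of EXITED: when Kubernetes "restarts" a container, the kubelet creates a brand-new container rather than reviving the old one.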

Alright, with that said, what is the relationship between your cluster, your pod, and the container runtime?

Patience, we're getting there in a moment!

Kubelet, Pod, CRI and Container Runtime

When you deploy a pod to your cluster, the kubelet (the core Kubernetes node agent responsible for the whole pod lifecycle) receives the pod spec from the cluster's API server.

The pod spec contains information such as the container image, right? Let's say the image is nginx: the kubelet asks the container runtime to run an nginx container. How does the kubelet communicate with the container runtime? It uses the CRI (Container Runtime Interface).

The CRI is a standard gRPC API that allows the kubelet to talk to any compatible container runtime in a consistent manner.

After the kubelet submits its request through the CRI, the container runtime pulls the image and runs the container according to the specification sent by the kubelet. The kubelet then receives the container's status back from the runtime through the CRI and reports it to the API server. Once the pod is running, the kubelet can use the same interface to stop containers, restart them, and so on.
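The exchange above can be sketched as a sequence of calls. The method names (RunPodSandbox, PullImage, CreateContainer, StartContainer, ContainerStatus, StopContainer) are real RPCs of the CRI RuntimeService and ImageService, kept in their original PascalCase on purpose. Everything else, the FakeRuntime class, the simplified arguments and the run_pod helper, is a hypothetical in-memory stand-in: the real kubelet talks gRPC to containerd over a Unix socket and passes much richer configs.

```python
import itertools


class FakeRuntime:
    """Hypothetical in-memory stand-in for a CRI runtime such as containerd."""

    def __init__(self) -> None:
        self.calls = []            # CRI calls received, in order
        self.images = set()        # images "pulled" so far
        self.containers = {}       # container id -> state name
        self._ids = itertools.count(1)

    # --- ImageService ---
    def PullImage(self, image: str) -> str:
        self.calls.append("PullImage")
        self.images.add(image)
        return image

    # --- RuntimeService ---
    def RunPodSandbox(self, pod_name: str) -> str:
        self.calls.append("RunPodSandbox")
        return f"sandbox-{pod_name}"

    def CreateContainer(self, sandbox_id: str, image: str) -> str:
        self.calls.append("CreateContainer")
        if image not in self.images:
            raise RuntimeError(f"image {image} not pulled")
        cid = f"ctr-{next(self._ids)}"
        self.containers[cid] = "CONTAINER_CREATED"
        return cid

    def StartContainer(self, cid: str) -> None:
        self.calls.append("StartContainer")
        self.containers[cid] = "CONTAINER_RUNNING"

    def ContainerStatus(self, cid: str) -> str:
        self.calls.append("ContainerStatus")
        return self.containers[cid]

    def StopContainer(self, cid: str) -> None:
        self.calls.append("StopContainer")
        self.containers[cid] = "CONTAINER_EXITED"


def run_pod(runtime: FakeRuntime, pod_name: str, image: str) -> str:
    """Roughly the order in which the kubelet drives the runtime for one container."""
    sandbox = runtime.RunPodSandbox(pod_name)
    runtime.PullImage(image)
    cid = runtime.CreateContainer(sandbox, image)
    runtime.StartContainer(cid)
    # The kubelet reads the status back and reports it to the API server.
    return runtime.ContainerStatus(cid)


rt = FakeRuntime()
print(run_pod(rt, "web", "nginx"))  # CONTAINER_RUNNING
```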

Does a container runtime like containerd have the capability to use GPUs natively? The simple answer is no! Then how do you give your workloads GPU access?

What is the NVIDIA Container Runtime?

The NVIDIA Container Runtime is an extension that can give containers access to the GPU resources on the host machine. It serves as a wrapper around runc, the low-level runtime that containerd uses to actually start containers. So when your workload runs under a standard container runtime like containerd and requests GPU acceleration, this extension lets the container use the GPUs provided by the host machine.
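One way the NVIDIA runtime has done this (its "legacy" mode) is to edit the container's OCI spec before handing it to runc: it adds a prestart hook, nvidia-container-runtime-hook, and when that hook runs it reads the NVIDIA_VISIBLE_DEVICES environment variable to decide which GPUs, driver libraries and device nodes to expose. The Python below is only a toy illustration of that spec-rewriting idea; the hook path, the simplified spec dict and both helper functions are assumptions of ours, and current toolkit versions can also work in other modes (such as CDI).

```python
import copy

# Assumed install path for the hook; it depends on how the toolkit was installed.
HOOK_PATH = "/usr/bin/nvidia-container-runtime-hook"


def add_gpu_hook(oci_spec: dict) -> dict:
    """Toy wrapper step: return a copy of the OCI spec with the GPU prestart
    hook added exactly once, as if right before the spec is handed to runc."""
    spec = copy.deepcopy(oci_spec)  # leave the caller's spec untouched
    prestart = spec.setdefault("hooks", {}).setdefault("prestart", [])
    if not any(h.get("path") == HOOK_PATH for h in prestart):
        prestart.append({"path": HOOK_PATH, "args": ["nvidia-container-runtime-hook", "prestart"]})
    return spec


def requested_gpus(oci_spec: dict) -> list:
    """Toy hook step: which GPUs does the container ask for via NVIDIA_VISIBLE_DEVICES?"""
    for entry in oci_spec.get("process", {}).get("env", []):
        key, _, value = entry.partition("=")
        if key == "NVIDIA_VISIBLE_DEVICES":
            if value in ("", "void", "none"):
                return []              # no GPUs requested
            return value.split(",")    # e.g. "all" or "0,1"
    return []                          # variable unset: behave like plain runc


spec = {"process": {"env": ["PATH=/usr/bin", "NVIDIA_VISIBLE_DEVICES=all"]}}
patched = add_gpu_hook(spec)
print(patched["hooks"]["prestart"][0]["path"])
print(requested_gpus(patched))  # ['all']
```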

Can you guess the main character of this short story? You got it: the container runtime, again!

Now, let us get our hands dirty, shall we?

Cluster Setup

First, we have to install the NVIDIA Container Toolkit. It includes the NVIDIA Container Runtime along with other packages that provide access to GPUs. In the k3s case, containerd is the default container runtime, so that's the runtime the toolkit has to work with.

Please check the official NVIDIA docs (installation guide) on how to install the NVIDIA Container Toolkit and configure it with containerd.

Alright, now we can spin up our cluster. We chose k3s for this blog post; the installation is a one-liner:

curl -sfL https://get.k3s.io | sh -

Check that the cluster is up and running:

➜ sudo k3s kubectl get nodes

NAME      STATUS   ROLES                  AGE   VERSION
node-01   Ready    control-plane,master   15d   v1.29.4+k3s1

If you want to get rid of the sudo k3s prefix, you can do the following:

sudo chmod 644 /etc/rancher/k3s/k3s.yaml

export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

kubectl get nodes

Yay!! Our cluster is ready!

Deploy Ollama as a central llm-manager

Ollama is a relatively new project that lets you run LLMs like Llama 3 on your local computer. It is optimized for CPUs as well, and it automatically picks the best fit for your workload: if your GPU memory isn't enough, it switches to the CPU automatically.

Deploy with OneChart

helm repo add onechart https://chart.onechart.dev && helm repo update

helm install llm-manager onechart/onechart \
  --set image.repository=ollama/ollama \
  --set image.tag=latest \
  --set containerPort=11434 \
  --set podSpec.runtimeClassName=nvidia

Ollama exposes port 11434 by default, so we set containerPort to match. We also set runtimeClassName to nvidia so the pod runs under the NVIDIA container runtime and benefits from GPU acceleration.
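Setting runtimeClassName=nvidia only works if a RuntimeClass named nvidia exists in the cluster and points at a containerd runtime handler called nvidia, which is the name k3s uses when it detects the NVIDIA runtime. Depending on your k3s version it may already be there; check with kubectl get runtimeclass. If it's missing, here is a small Python sketch (the file name runtimeclass.py is just our choice) that prints the manifest as JSON, which kubectl accepts as readily as YAML:

```python
import json


def runtime_class(name: str = "nvidia", handler: str = "nvidia") -> dict:
    """Build a RuntimeClass manifest. 'handler' must match the runtime name
    configured in containerd; 'name' is what pods use as runtimeClassName."""
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        "handler": handler,
    }


if __name__ == "__main__":
    print(json.dumps(runtime_class(), indent=2))
```

Apply it with python3 runtimeclass.py | kubectl apply -f - and then reinstall or restart the llm-manager pod.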

➜ kubectl get po,svc | grep llm-manager

pod/llm-manager-665765c8df-8pnz7   1/1         Running         3 (5h19m ago)   39h
service/llm-manager                ClusterIP   10.43.240.248   <none>          80/TCP   4d22h

If we check the logs, we can see that Ollama detects the GPU, if you have one.

In our case, it is an NVIDIA GeForce RTX 2060.
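If you'd rather check this from a script than by eye, the interesting line is the one with msg="inference compute". Ollama writes these logs as key=value pairs with double-quoted values, so Python's shlex can split them. A minimal sketch, assuming the log format stays as in our output; feed it, for example, the text of kubectl logs deploy/llm-manager:

```python
import shlex


def parse_logfmt(line: str) -> dict:
    """Split a key=value log line (values may be double-quoted) into a dict."""
    fields = {}
    for token in shlex.split(line):  # raises ValueError on unbalanced quotes
        key, sep, value = token.partition("=")
        if sep:
            fields[key] = value
    return fields


def detected_gpu(log_text: str):
    """Return (name, library) from the first 'inference compute' line, or None."""
    for line in log_text.splitlines():
        try:
            fields = parse_logfmt(line)
        except ValueError:
            continue  # not a well-formed logfmt line, skip it
        if fields.get("msg") == "inference compute":
            return fields.get("name"), fields.get("library")
    return None


sample = ('time=2024-05-19T07:34:34.379Z level=INFO source=types.go:71 msg="inference compute" '
          'id=GPU-2607f162-ae64-a3c2-13dc-0fe25020d136 library=cuda compute=7.5 driver=12.4 '
          'name="NVIDIA GeForce RTX 2060" total="5.8 GiB" available="5.7 GiB"')
print(detected_gpu(sample))  # ('NVIDIA GeForce RTX 2060', 'cuda')
```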

➜ kubectl logs llm-manager-665765c8df-8pnz7

time=2024-05-19T07:34:34.379Z level=WARN source=amd_linux.go:163 msg="amdgpu too old gfx000" gpu=0

time=2024-05-19T07:34:34.379Z level=INFO source=amd_linux.go:311 msg="no compatible amdgpu devices detected"

time=2024-05-19T07:34:34.379Z level=INFO source=types.go:71 msg="inference compute" id=GPU-2607f162-ae64-a3c2-13dc-0fe25020d136 library=cuda compute=7.5 driver=12.4 name="NVIDIA GeForce RTX 2060" total="5.8 GiB" available="5.7 GiB"

For the next step, we'll create a client pod that interacts with the llm-manager.

Set Up a Chatbot Powered by Llama 3 and Gradio

We've already written a simple Python app and packaged it as ghcr.io/biznesbees/chatbot-v0.1.0.

Please refer to the source code here: github/chatbot-v0.1.0

What does this image do?

- It runs a web interface powered by Gradio

- It asks the llm-manager to pull the chosen LLM if it isn't available yet

- It posts your text input to that model

- It receives the answers from the chosen LLM
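The chatbot's flow can be sketched without Gradio, using only Python's standard library and Ollama's REST API: GET /api/tags lists the models the server already has, POST /api/pull downloads one, and POST /api/chat sends the conversation. This is not the code inside ghcr.io/biznesbees/chatbot-v0.1.0, just a minimal sketch. The helper names are ours, it assumes OLLAMA_HOST is a full base URL such as http://llm-manager, and it sends the model to pull in the name field used by the API docs of that period (newer Ollama docs call it model and still accept name).

```python
import json
import os
import urllib.request

# Assumption: OLLAMA_HOST holds a full base URL, e.g. "http://llm-manager" inside the
# cluster. The fallback is Ollama's default local port, handy for testing.
OLLAMA_HOST = os.environ.get("OLLAMA_HOST", "http://localhost:11434")


def _json_post(host: str, path: str, payload: dict) -> urllib.request.Request:
    """Build (but don't send) a JSON POST request to the Ollama REST API."""
    return urllib.request.Request(
        host.rstrip("/") + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_request(model: str, host: str = OLLAMA_HOST) -> urllib.request.Request:
    # "stream": False -> a single final status object instead of progress updates.
    return _json_post(host, "/api/pull", {"name": model, "stream": False})


def chat_request(model: str, prompt: str, host: str = OLLAMA_HOST) -> urllib.request.Request:
    messages = [{"role": "user", "content": prompt}]
    return _json_post(host, "/api/chat", {"model": model, "messages": messages, "stream": False})


def _send(req) -> dict:
    """Send a Request (or GET a URL string) and decode the JSON body."""
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.load(resp)


def local_models(host: str = OLLAMA_HOST) -> set:
    # Names from /api/tags include the tag, e.g. "qwen:0.5b".
    return {m["name"] for m in _send(host.rstrip("/") + "/api/tags").get("models", [])}


def ask(model: str, prompt: str, host: str = OLLAMA_HOST) -> str:
    """Pull the model if the server doesn't have it yet, then send one chat turn."""
    if model not in local_models(host):
        _send(pull_request(model, host))  # can take minutes for bigger models
    reply = _send(chat_request(model, prompt, host))
    return reply["message"]["content"]
```

For example, after kubectl port-forward svc/llm-manager 11434:80 on your laptop, print(ask("qwen:0.5b", "Why is the sky blue?")) would pull qwen:0.5b on first use and then print its answer.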

Let's deploy our chatbot and start doing some magic!

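helm install chatbot onechart/onechart \
  --set image.repository=ghcr.io/biznesbees/chatbot-v0.1.0 \
  --set image.tag=latest \
  --set vars.OLLAMA_HOST=http://llm-manager

The code expects an environment variable OLLAMA_HOST set to the llm-manager address, since that's where the chatbot sends its requests. The llm-manager Service listens on port 80 (see the kubectl get po,svc output above), so http://llm-manager needs no explicit port.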

Let's port-forward the chatbot to our local machine and access it through the browser.

➜ kubectl port-forward svc/chatbot 8080:80
Forwarding from 127.0.0.1:8080 -> 80
Forwarding from [::1]:8080 -> 80

Now open http://localhost:8080 in your browser. I think we're ready to test our chatbot, baby!!

Run Experiments

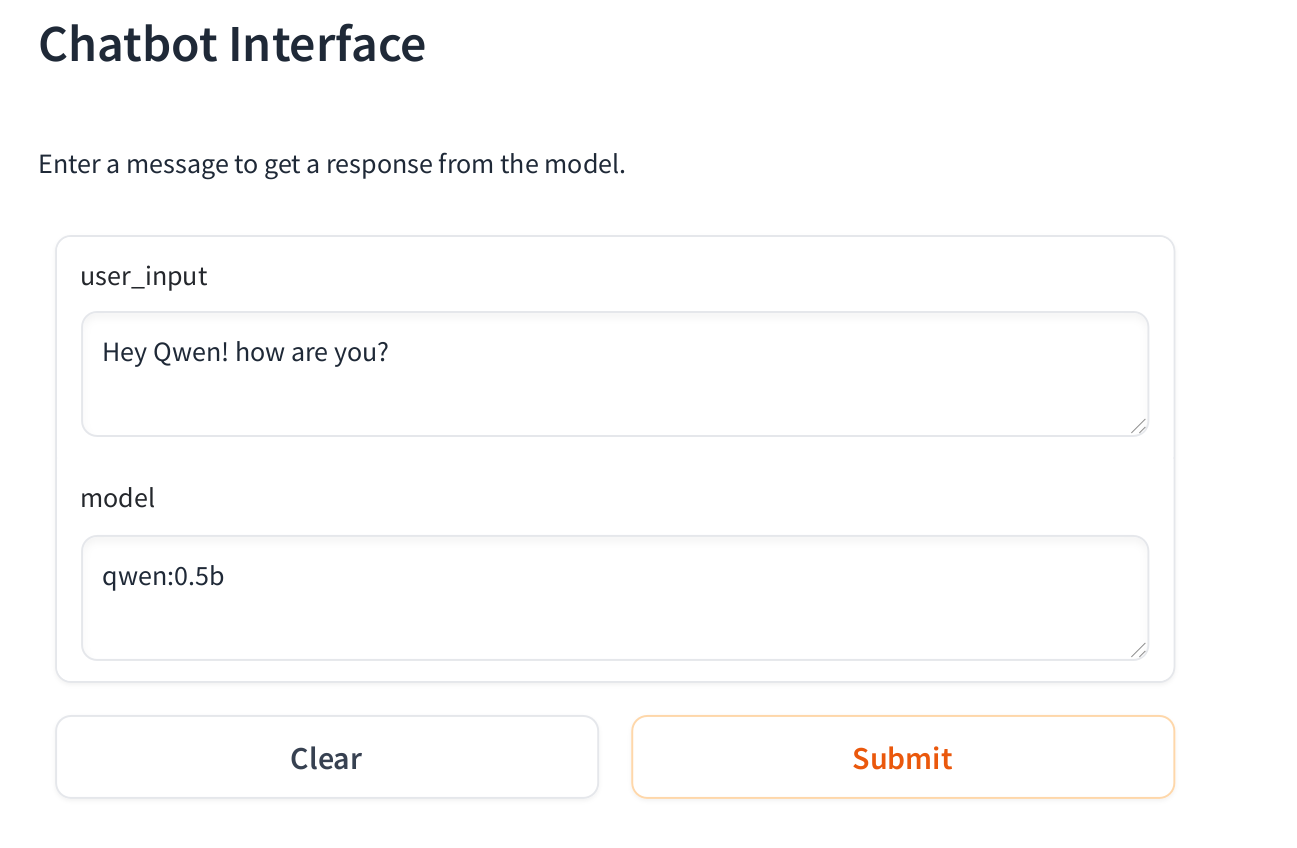

Alright, we're going to run two experiments:

1. qwen:0.5b

As you can see, we selected the Qwen model with half a billion parameters. When we hit submit, the llm-manager first downloads the model if it isn't already available.

We can check the logs of the llm-manager:

➜ kubectl logs llm-manager-665765c8df-8pnz7

time=2024-05-19T13:45:50.851Z level=INFO source=download.go:136 msg="downloading fad2a06e4cc7 in 4 100 MB part(s)"

time=2024-05-19T13:47:15.893Z level=INFO source=download.go:136 msg="downloading 41c2cf8c272f in 1 7.3 KB part(s)"

time=2024-05-19T13:47:19.053Z level=INFO source=download.go:136 msg="downloading 1da0581fd4ce in 1 130 B part(s)"

time=2024-05-19T13:47:22.141Z level=INFO source=download.go:136 msg="downloading f02dd72bb242 in 1 59 B part(s)"

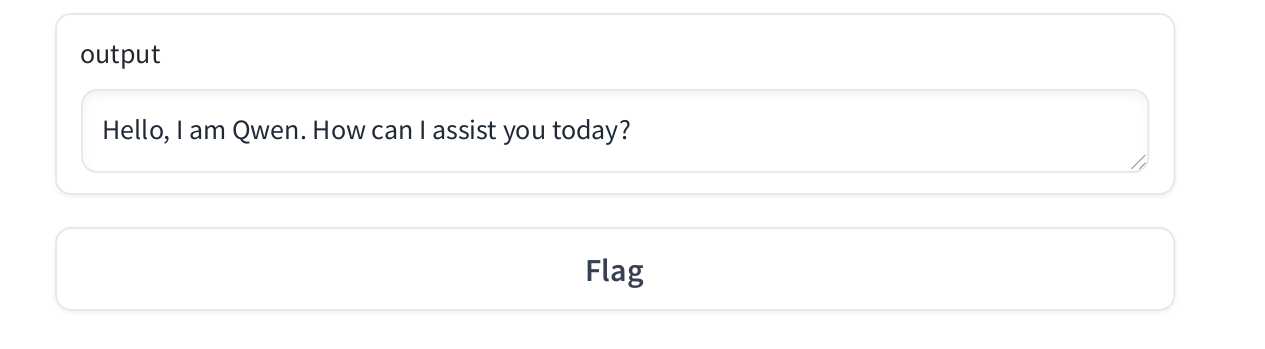

time=2024-05-19T13:47:25.217Z level=INFO source=download.go:136 msg="downloading ea0a531a015b in 1 485 B part(s)"After the download completes, we will get an answer from the qwen model as you see in the image; and it will use the provided GPU.
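The "downloading" log lines also give a rough idea of how big a model is: each names a blob and how many parts it is fetched in. Summing parts × part size gives an approximate total; it is only approximate because the logged part size is rounded and the last part of a blob may be smaller. A small sketch of that arithmetic, assuming the message format stays exactly as in our logs and that the units are decimal (1 MB = 10^6 bytes):

```python
import re

# Assumption: decimal units, as in the "100 MB" / "7.3 KB" sizes in the logs.
UNITS = {"B": 1, "KB": 10**3, "MB": 10**6, "GB": 10**9}
PATTERN = re.compile(r"downloading (\w+) in (\d+) ([\d.]+) (B|KB|MB|GB) part\(s\)")


def estimate_download_bytes(log_text: str) -> float:
    """Approximate total bytes: sum of (parts x part size) over all 'downloading' lines."""
    total = 0.0
    for _digest, parts, size, unit in PATTERN.findall(log_text):
        total += int(parts) * float(size) * UNITS[unit]
    return total
```

For the five qwen:0.5b lines above this comes to roughly 400 MB, almost all of it the "4 100 MB part(s)" blob.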

To check GPU usage, run nvidia-smi on the host.
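The full nvidia-smi table is meant for humans. In a script (say, to confirm that ollama_llama_server is the process holding GPU memory), nvidia-smi can print just the compute processes as CSV with --query-compute-apps=pid,process_name,used_memory --format=csv,noheader,nounits. A minimal parser for that output; the sample row in the usage note is hypothetical, modeled on the process row from our run rather than captured from CSV mode:

```python
import csv
import io
import subprocess

QUERY = ["nvidia-smi", "--query-compute-apps=pid,process_name,used_memory",
         "--format=csv,noheader,nounits"]


def parse_compute_apps(csv_text: str) -> list:
    """Turn nvidia-smi CSV rows into dicts; with nounits, used_memory is plain MiB."""
    rows = []
    for row in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if len(row) != 3:
            continue  # skip blank or unexpected lines
        pid, name, mem = row
        rows.append({"pid": int(pid), "process": name, "used_mib": int(mem)})
    return rows


def gpu_processes() -> list:
    """Run the query on the host (needs the NVIDIA driver and nvidia-smi installed)."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_compute_apps(out)
```

For instance, parse_compute_apps("348592, ollama_llama_server, 942\n") returns [{'pid': 348592, 'process': 'ollama_llama_server', 'used_mib': 942}].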

➜ nvidia-smi

Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

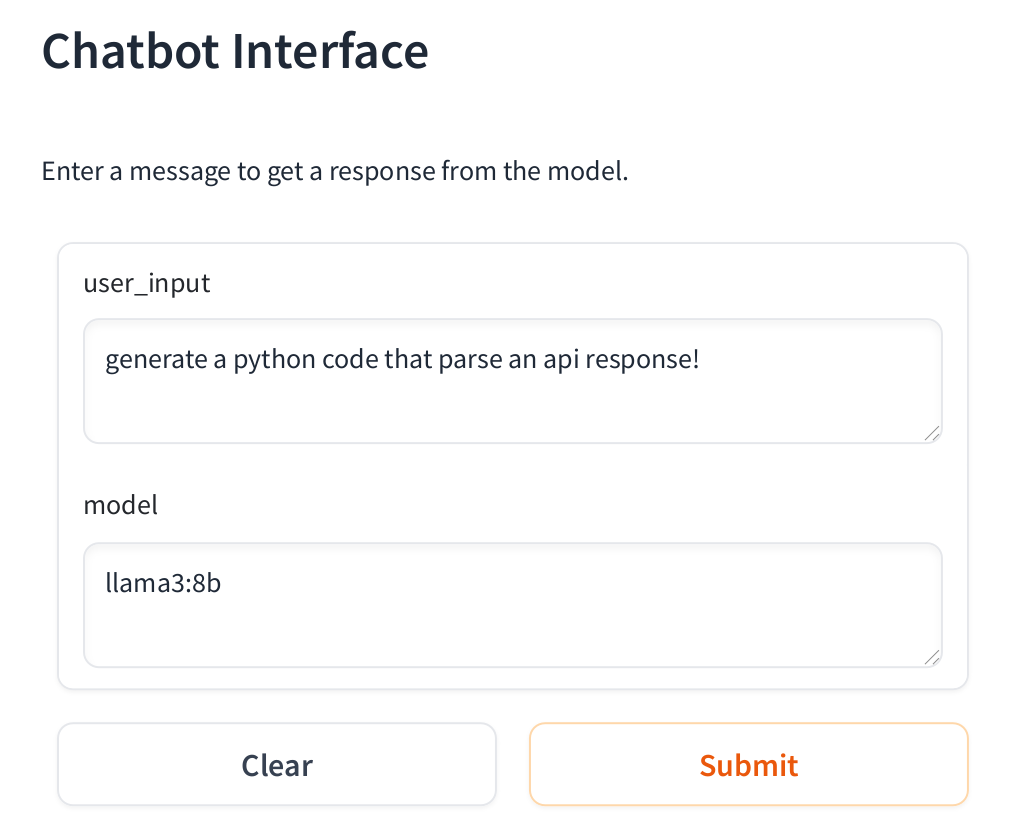

| 0 N/A N/A 348592 C ...unners/cuda_v11/ollama_llama_server 942MiB |2. llama:8b

The second option is Llama 3 with 8 billion parameters. Since our GPU can't handle llama3:8b, Ollama shifts the inference to the CPU.
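CPU usage tells you the model has fallen back to the CPU, but to compare the two experiments you can also look at generation speed. With "stream": false, the final JSON from Ollama's /api/chat (and /api/generate) includes eval_count (tokens generated) and eval_duration (time spent generating, in nanoseconds), so tokens per second is eval_count / eval_duration × 10^9. A minimal helper, assuming you already have that response decoded into a dict:

```python
def tokens_per_second(response: dict) -> float:
    """Generation speed from a final Ollama response; eval_duration is in nanoseconds."""
    count = response.get("eval_count", 0)
    duration_ns = response.get("eval_duration", 0)
    if duration_ns <= 0:
        raise ValueError("no eval_duration; is this the final (done) response?")
    return count * 1e9 / duration_ns


# Illustrative numbers only: 100 tokens generated in 2 seconds.
print(tokens_per_second({"eval_count": 100, "eval_duration": 2_000_000_000}))  # 50.0
```

Running the same prompt against qwen:0.5b and llama3:8b and comparing the two numbers makes the GPU versus CPU difference concrete.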

We'll repeat the same process, but this time we'll check CPU usage at inference time.

You can check CPU usage with the top command; you will notice a huge increase in CPU utilization.

➜ top

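PID      USER   PR   NI   VIRT      RES    SHR    S   %CPU    %MEM   TIME+      COMMAND
407091   root   20   0    5736224   4,7g   4,3g   R   776,3   31,3   16:37.00   ollama_llama_se

Since top counts each fully busy core as 100%, a %CPU of 776,3 means the Ollama runner is keeping close to eight cores busy.

Conclusion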

In this blog post, we saw how easily we can deploy LLMs with Ollama and Kubernetes, and how to enable GPUs to accelerate those workloads.